Software Testing Metrics

Increasing competition and rapid advances in technology have pushed companies to adopt innovative approaches to assessing their processes, products and services. This assessment helps them improve their business so that they succeed, earn more profit and gain a larger share of the market.

Metrics are the cornerstone of assessment and the foundation of any business improvement.

2. Software Metrics

A metric is a standard unit of measurement that quantifies results. Metrics used to evaluate software processes, products and services are termed software metrics.

Paul Goodman defines software metrics as follows:

Software metrics is a measurement-based technique applied to processes, products and services to supply engineering and management information, and to act on that information to improve those processes, products and services, if required.

3. Importance of Metrics

- Metrics are used to improve the quality and productivity of products and services, thus achieving customer satisfaction.

- A single number is easy for management to digest, and they can drill down into the details if required.

- Trends in different metrics act as a monitor that signals when the process is going out of control.

- Metrics provide a basis for improving the current process.

4. Points to Remember

- Use only metrics for which accurate and complete data can be collected.

- Metrics must be easy to explain and evaluate.

- Benchmarks for metrics vary from organization to organization and also from person to person.

5. Metrics Life-cycle

The process involved in setting up the metrics:

6. Types of Software Testing Metrics

Based on the type of testing performed, software testing metrics fall into the following categories:

1. Manual Testing Metrics

2. Performance Testing Metrics

3. Automation Testing Metrics

The following figure shows the different software testing metrics.

Let’s have a look at each of them.

6.1 Manual Testing Metrics

6.1.1 Test Case Productivity (TCP)

This metric gives the test case writing productivity, on the basis of which one can draw a conclusion.

One can compare the test case productivity value with that of previous release(s) and draw more effective conclusions from it.

TC Productivity Trend

6.1.2 Test Execution Summary

This metric classifies the executed test cases by status, along with the reason where available. It gives a statistical view of the release. One can collect data on the number of test cases executed with each of the following statuses:

- Pass.

- Fail, with the reason for failure.

- Unable to Test, with the reason. Typical reasons for this status are a time crunch, a postponed defect, a setup issue, or the test being out of scope.

Summary Trend

One can also show the same trend for the reasons behind the Fail and Unable to Test test cases.
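A minimal sketch of how the execution summary and the reason breakdown could be tallied, assuming each executed test case is recorded as a (status, reason) pair. The record format and the sample data are hypothetical; only the three statuses come from the text.

```python
from collections import Counter

# Each executed test case: (status, reason); reason is None when not applicable.
# Hypothetical sample data using the statuses listed above.
results = [
    ("Pass", None),
    ("Pass", None),
    ("Fail", "Functional defect"),
    ("Unable to Test", "Setup issue"),
    ("Unable to Test", "Time crunch"),
    ("Pass", None),
]


def execution_summary(records):
    """Return {status: (count, percentage of all executed test cases)}."""
    counts = Counter(status for status, _ in records)
    total = len(records)
    return {status: (n, 100.0 * n / total) for status, n in counts.items()}


def reason_breakdown(records, status):
    """Count the recorded reasons for one status, e.g. Fail or Unable to Test."""
    return Counter(reason for s, reason in records if s == status and reason)


for status, (n, pct) in execution_summary(results).items():
    print(f"{status}: {n} ({pct:.1f}%)")
print(reason_breakdown(results, "Unable to Test"))
```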

6.1.3 Defect Acceptance (DA)

This metric determines the number of valid defects that the testing team has identified during execution.

The value of this metric can be compared with that of the previous release to get a better picture.
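The text does not reproduce the formula. A common way to express Defect Acceptance, assumed here, is as a percentage: DA = (number of valid defects / total number of defects raised) * 100. The figures below are hypothetical.

```python
def defect_acceptance(valid_defects: int, total_defects: int) -> float:
    """Defect Acceptance as a percentage.

    Assumption: DA = (valid defects / total defects raised) * 100.
    """
    if total_defects == 0:
        raise ValueError("no defects were raised")
    return 100.0 * valid_defects / total_defects


# Hypothetical figures, compared release over release.
current_da = defect_acceptance(valid_defects=45, total_defects=50)
previous_da = defect_acceptance(valid_defects=38, total_defects=40)
print(f"current: {current_da:.1f}%, previous: {previous_da:.1f}%")
```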

Defect Acceptance Trend

6.1.4 Defect Rejection

This metric determines the number of defects rejected during execution.

It gives the percentage of invalid defects the testing team has opened, which one can then control whenever required.
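A sketch of the percentage, assuming DR = (number of rejected defects / total number of defects raised) * 100. The alert threshold is a hypothetical, team-specific value used only to show how the metric can trigger corrective action.

```python
def defect_rejection(rejected_defects: int, total_defects: int) -> float:
    """Defect Rejection as a percentage of all defects raised.

    Assumption: DR = (rejected defects / total defects raised) * 100.
    """
    if total_defects == 0:
        raise ValueError("no defects were raised")
    return 100.0 * rejected_defects / total_defects


REJECTION_LIMIT = 8.0  # hypothetical threshold, in percent

# Hypothetical data: 5 of the 50 defects raised were rejected as invalid.
dr = defect_rejection(rejected_defects=5, total_defects=50)
print(f"Defect Rejection: {dr:.1f}%")
if dr > REJECTION_LIMIT:
    print("Rejection rate above limit: review how defects are being logged")
```

If every defect raised is either accepted or rejected, this value is simply 100 minus the Defect Acceptance percentage.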

Defect Rejection Trend

6.1.5 Bad Fix Defect (B)

A defect whose resolution gives rise to new defect(s) is a bad fix defect.

This metric determines the effectiveness of the defect resolution process.

It gives the percentage of defect resolutions that turned out to be bad fixes, a figure that needs to be kept under control.
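A minimal sketch, assuming the percentage is taken over all valid defects: B = (number of bad fix defects / total number of valid defects) * 100. The denominator is an assumption, since the text does not give the formula, and the counts are hypothetical.

```python
def bad_fix_percentage(bad_fix_defects: int, total_valid_defects: int) -> float:
    """Share of defects introduced by earlier fixes, in percent.

    Assumption: B = (bad fix defects / total valid defects) * 100.
    """
    if total_valid_defects == 0:
        raise ValueError("no valid defects")
    return 100.0 * bad_fix_defects / total_valid_defects


# Hypothetical data: 3 of 60 valid defects were caused by earlier fixes.
print(f"Bad fix defects: {bad_fix_percentage(3, 60):.1f}%")
```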

Bad Fix Defect Trend

6.1.6 Test Execution Productivity (TEP)

This metric gives the test case execution productivity, which on further analysis can lead to a conclusive result.

Test Execution Productivity Trend

6.1.7 Test Efficiency

This metric determines the efficiency of the testing team in identifying defects.

It also indicates the defects missed during the testing phase that migrated to the next phase.

Test Efficiency = (DT / (DT + DU)) * 100 %

Where,

DT = Number of valid defects identified during testing.

DU = Number of valid defects identified by users after release of the application, in other words, post-testing defects.

Test Efficiency Trend

6.1.8 Defect Severity Index (DSI)

This metric indicates the quality of the product under test and at the time of release, and it can be used to decide whether to release the product.

The Defect Severity Index can be divided into two parts:

1. DSI for All Status defect(s): This value gives the quality of the product under test.

2. DSI for Open Status defect(s): This value gives the quality of the product at the time of release. Only open status defect(s) must be considered when calculating this value.
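The DSI formula is not reproduced in the text. A common formulation, assumed here, is a severity-weighted average: each valid defect contributes its severity weight, and the sum is divided by the number of defects considered. The four-level scale (Critical = 4 down to Low = 1) and the defect log are hypothetical; they are not the data behind the 2.8 and 3.0 values quoted from the graph.

```python
# Hypothetical severity weights; each organization defines its own scale.
SEVERITY_WEIGHT = {"Critical": 4, "High": 3, "Medium": 2, "Low": 1}


def defect_severity_index(defects, statuses=None):
    """Weighted average severity of the selected defects.

    defects: iterable of (severity, status) pairs.
    statuses: if given, only defects whose status is in this set count,
              e.g. {"Open"} for DSI at the time of release.
    Assumption: DSI = sum of severity weights / number of defects considered.
    """
    selected = [sev for sev, status in defects
                if statuses is None or status in statuses]
    if not selected:
        raise ValueError("no defects match the selection")
    return sum(SEVERITY_WEIGHT[sev] for sev in selected) / len(selected)


# Hypothetical defect log: (severity, status)
log = [
    ("Critical", "Open"), ("High", "Open"), ("High", "Closed"),
    ("Medium", "Closed"), ("Low", "Closed"), ("High", "Open"),
]
print(f"DSI - All Status:  {defect_severity_index(log):.2f}")
print(f"DSI - Open Status: {defect_severity_index(log, {'Open'}):.2f}")
```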

The graph shows that:

- Quality of the product under test, i.e. DSI – All Status = 2.8 (High Severity)

- Quality of the product at the time of release, i.e. DSI – Open Status = 3.0 (High Severity)

6.2 Performance Testing Metrics

6.2.1 Performance Scripting Productivity (PSP)

This metric gives the scripting productivity for performance test scripts, which can be tracked as a trend over a period of time.

Performance Scripting Productivity = Operations Performed / Efforts (hours)

Where operations performed are:

1. Number of clicks, i.e. clicks on which data is refreshed.

2. Number of input parameters.

3. Number of correlation parameters.

The above evaluation process does include logic embedded into the script, which is rarely used.

Example

Effort taken for scripting = 10 hours.

Performance scripting productivity = 20 operations / 10 hours = 2 operations/hour
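Counting operations as the sum of the three items listed and dividing by scripting effort reproduces the example's 2 operations/hour. The split of the 20 operations into clicks, input parameters and correlation parameters below is hypothetical, since the example gives only the total.

```python
def performance_scripting_productivity(clicks: int, input_params: int,
                                       correlation_params: int,
                                       effort_hours: float) -> float:
    """Operations scripted per hour.

    Operations performed = data-refreshing clicks + input parameters
    + correlation parameters, as listed in the article.
    """
    if effort_hours <= 0:
        raise ValueError("effort_hours must be positive")
    operations = clicks + input_params + correlation_params
    return operations / effort_hours


# Hypothetical breakdown of 20 operations scripted in 10 hours.
print(performance_scripting_productivity(clicks=10, input_params=6,
                                         correlation_params=4, effort_hours=10))
```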

Performance Scripting Productivity Trend

6.3 Automation Testing Metrics

6.3.2 Automation Test Execution Productivity (AEP)

This metric gives the automated test case execution productivity.

The evaluation process is similar to that of manual Test Execution Productivity.

6.3.3 Automation Coverage

This metric gives the percentage of manual test cases automated.

Example

If there are 100 manual test cases and 60 of them have been automated, then Automation Coverage = 60%.
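The example implies Automation Coverage = (automated test cases / total manual test cases) * 100. A small sketch of that calculation, reproducing the 60% figure; the function name is hypothetical.

```python
def automation_coverage(automated_cases: int, manual_cases: int) -> float:
    """Percentage of manual test cases that have been automated."""
    if manual_cases == 0:
        raise ValueError("no manual test cases")
    return 100.0 * automated_cases / manual_cases


print(f"Automation Coverage = {automation_coverage(60, 100):.0f}%")
```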

6.3.4 Cost Comparison

This metric compares the cost of manual testing with that of automation testing. It is used to arrive at a conclusive ROI (return on investment).

Manual cost is evaluated as:

Cost (M) = Execution Efforts (hours) * Billing Rate

Automation cost is evaluated as:

Cost (A) = Tool Purchased Cost (One time investment) + Maintenance Cost + Script Development Cost + (Execution Efforts (hrs) * Billing Rate)

If the script is reused, the script development cost becomes the script update cost.

Using this metric, one can reach an effective conclusion in monetary terms, which plays a vital role in the IT industry.
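A sketch of the comparison, using the manual cost formula Cost (M) = Execution Efforts (hours) * Billing Rate and the automation cost formula given here. The ROI definition (savings divided by automation cost) and all figures are assumptions for illustration; the second release shows the reuse case, where the one-time tool cost drops out and script development is replaced by an update cost.

```python
def manual_cost(execution_hours: float, billing_rate: float) -> float:
    """Cost (M) = Execution Efforts (hours) * Billing Rate."""
    return execution_hours * billing_rate


def automation_cost(tool_cost: float, maintenance_cost: float,
                    script_cost: float, execution_hours: float,
                    billing_rate: float) -> float:
    """Cost (A) = tool cost + maintenance + script development (or update)
    + execution effort (hours) * billing rate."""
    return tool_cost + maintenance_cost + script_cost + execution_hours * billing_rate


# Hypothetical figures for one release; currency units are arbitrary.
rate = 50.0
cost_m = manual_cost(execution_hours=400, billing_rate=rate)
cost_a = automation_cost(tool_cost=5000, maintenance_cost=1000,
                         script_cost=6000, execution_hours=40, billing_rate=rate)
roi = 100.0 * (cost_m - cost_a) / cost_a  # assumption: ROI = savings / Cost (A)
print(f"Cost (M) = {cost_m:.0f}, Cost (A) = {cost_a:.0f}, ROI = {roi:.1f}%")

# Next release: the tool is already paid for and scripts are reused, so the
# script development cost becomes a (hypothetical) script update cost.
cost_a_next = automation_cost(tool_cost=0, maintenance_cost=1000,
                              script_cost=1500, execution_hours=40, billing_rate=rate)
print(f"Cost (A), next release = {cost_a_next:.0f}")
```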

6.4 Common Metrics for all types of testing

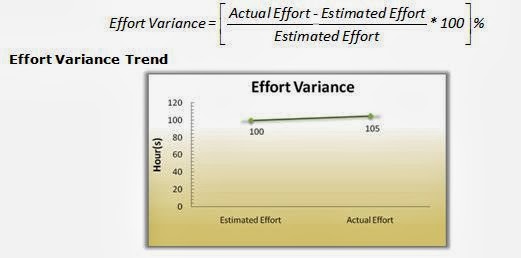

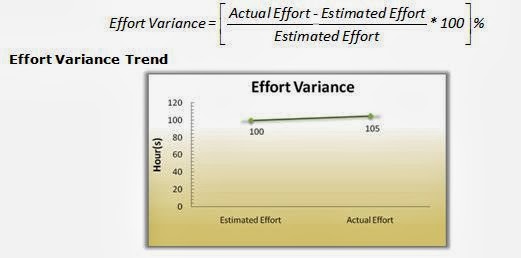

6.4.1 Effort Variance (EV)

This metric gives the variance in the estimated effort.

6.4.2 Schedule Variance (SV)

This metric gives the variance in the estimated schedule, i.e. the number of days.
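The text does not give formulas for Effort Variance and Schedule Variance. A common formulation, assumed here, expresses each as a percentage deviation from the estimate: EV = (Actual Effort - Estimated Effort) / Estimated Effort * 100, and SV the same using the number of days. Because both share one form, a single helper covers them; the project figures are hypothetical.

```python
def variance_percent(actual: float, estimated: float) -> float:
    """Percentage deviation of the actual value from the estimate.

    Positive means an overrun; negative means the actual came in under the estimate.
    """
    if estimated == 0:
        raise ValueError("estimate must be non-zero")
    return 100.0 * (actual - estimated) / estimated


# Hypothetical project data.
effort_variance = variance_percent(actual=110, estimated=100)  # person-hours
schedule_variance = variance_percent(actual=18, estimated=20)  # days
print(f"EV = {effort_variance:.1f}%, SV = {schedule_variance:.1f}%")
```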

6.4.3 Scope Change (SC)

This metric indicates how stable the scope of testing is.
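One way to quantify scope stability, assumed here since the text gives no formula, is SC = (Total Scope - Previous Scope) / Previous Scope * 100, where Total Scope = Previous Scope + added items - removed items. Measuring scope as a count of requirements or test cases is also an assumption, and the figures are hypothetical.

```python
def scope_change(previous_scope: int, added: int = 0, removed: int = 0) -> float:
    """Percentage change in testing scope.

    Scope is measured as a count of items (e.g. requirements), which is an
    assumption. Assumption: SC = (total - previous) / previous * 100,
    where total = previous + added - removed.
    """
    if previous_scope <= 0:
        raise ValueError("previous_scope must be positive")
    total_scope = previous_scope + added - removed
    return 100.0 * (total_scope - previous_scope) / previous_scope


# Hypothetical data: 200 requirements baselined, 30 added, 10 dropped.
print(f"Scope Change = {scope_change(200, added=30, removed=10):.1f}%")
```

Because only the net change is counted, equal additions and removals cancel out; tracking them separately may give a truer picture of how stable the scope really is.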

7.0 Conclusion

Metrics are the cornerstone of assessment and the foundation of any business improvement. They are a measurement-based technique applied to processes, products and services to supply engineering and management information, and to act on that information to improve those processes, products and services, if required. Metrics indicate the level of customer satisfaction, are easy for management to digest and drill down into whenever required, and act as a monitor when the process is going out of control.

The following table summarizes the software testing metrics discussed in this paper:

| Test Metric | Description |
|---|---|
| Manual Testing Metrics | |
| Test Case Productivity | Provides the number of step(s) written per hour. |
| Test Execution Summary | Provides a statistical view of execution for the release, along with status and reason. |
| Defect Acceptance | Indicates the stability and reliability of the application. |
| Defect Rejection | Provides the percentage of invalid defects. |
| Bad Fix Defect | Indicates the effectiveness of the defect-resolution process. |
| Test Execution Productivity | Provides the number of test cases executed per day. |
| Test Efficiency | Indicates the testing capability of the tester in identifying defects. |
| Defect Severity Index | Provides an indication of the quality of the product under test and at the time of release. |
| Performance Testing Metrics | |
| Performance Scripting Productivity | Provides the scripting productivity for the performance test flow. |
| Performance Execution Summary | Provides a classification of the number of tests conducted, along with status (Pass/Fail), for various types of performance testing. |
| Performance Execution Data - Client Side | Gives detailed information on client-side data for execution. |
| Performance Execution Data - Server Side | Gives detailed information on server-side data for execution. |
| Performance Test Efficiency | Indicates the quality of the performance team in meeting the performance requirement(s). |
| Performance Severity Index | Indicates the quality of the product under test with respect to performance criteria. |
| Automation Testing Metrics | |
| Automation Scripting Productivity | Indicates the scripting productivity for automation test scripts. |
| Automation Execution Productivity | Provides execution productivity per day. |
| Automation Coverage | Gives the percentage of manual test cases automated. |
| Cost Comparison | Provides information on ROI. |
| Common Metrics for All Types of Testing | |
| Effort Variance | Indicates effort stability. |
| Schedule Variance | Indicates schedule stability. |
| Scope Change | Indicates requirement stability. |

Thus, metrics help an organization obtain the information it needs to keep improving its processes, products and services and to achieve its desired goals, since:

"You cannot control what you cannot measure"

(Tom DeMarco)
